Have you ever wondered how search engines return new and updated content every time you search for something? It happens because search engines send bots to explore web pages, and crawl depth determines how far into a site those bots go. But why should you care about it? What are its benefits? How does it work? Crawl depth is an important SEO metric and an often overlooked part of website management. Because search engine bots discover and index pages by following links deeper into a site, optimizing and controlling crawl depth helps you manage website performance and how your pages rank. In this blog post, we'll explore why optimizing crawl depth matters: it helps ensure that your most important content is crawled regularly and indexed quickly, which ultimately improves the user experience.

What is crawling and indexing?

As of 2024, there are about 1.09 billion websites on the internet. However, search engines cannot crawl all of them, so designing your website to be easy to crawl is crucial for the visibility of your pages. Crawling is the process in which search engine bots (also called web crawlers or spiders) visit website URLs to discover content such as videos, images, text, and other file types. An XML sitemap, which you can create with a sitemap generator tool, helps search engines find on-site pages that they might miss during regular crawling. Indexing is the process by which search engines organize the information found on pages and store it in their database, so that your website can appear on the search results page whenever a user searches for a relevant query. Google Search Console (formerly Google Webmaster Tools) helps you monitor how your website is crawled, indexed, and performing in search. For a detailed explanation of crawling and indexing, you can refer to the blog: ultimate guide to crawling and indexing.
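To make the discovery step of crawling more concrete, here is a minimal sketch of how a crawler might find new URLs on a page: it parses the HTML, collects the `href` of every `<a>` tag, resolves relative links against the page URL, and keeps only links on the same host. The function names and example URLs are hypothetical, and real search engine crawlers do far more (rendering JavaScript, respecting robots.txt, scheduling fetches).

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href attribute of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def extract_internal_links(page_url, html):
    """Return absolute same-host links found in html, in order, without duplicates."""
    collector = LinkCollector()
    collector.feed(html)
    host = urlparse(page_url).netloc
    links = []
    for href in collector.hrefs:
        # Resolve relative links and drop #fragments, which point to the same page.
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        if urlparse(absolute).netloc == host and absolute not in links:
            links.append(absolute)
    return links


html = """
<a href="/products/">Products</a>
<a href="about.html#team">About</a>
<a href="https://other-site.example/">External</a>
<a href="/products/">Products again</a>
"""
print(extract_internal_links("https://example.com/index.html", html))
# ['https://example.com/products/', 'https://example.com/about.html']
```

A crawler repeats this step for each newly found URL, which is how it works its way from the homepage into deeper pages.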

What is crawl depth?

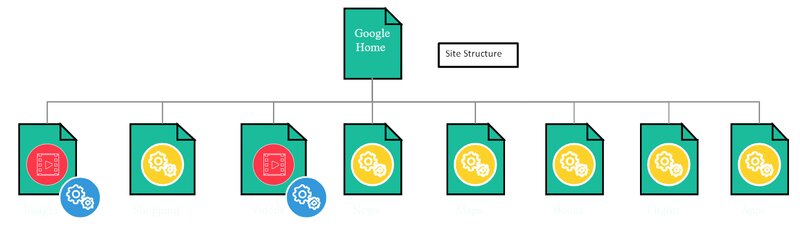

Crawl depth refers to how far a search engine's bot goes into a site's link structure during a crawl, usually measured as the number of links it must follow from the homepage to reach a page. It is closely related to click depth, which is the number of clicks a user must make from the homepage to reach the same page. Four levels of depth are usually identified: the home page, main categories, subcategories, and products. Adding more levels is not recommended, because deeper structures make navigation harder for users. Optimizing crawl depth is an important strategy for improving a website's SEO ranking, since a shallow, logical structure helps search engines understand how the site is organized. A good website structure also helps users navigate the site easily, which enhances the user experience. Keeping crawl depth in check therefore helps search engines find and index your content, improving both user experience and website visibility.
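If you have a map of your internal links, you can compute each page's click depth with a breadth-first search from the homepage: the first time the search reaches a page is also the shortest path to it. Below is a minimal sketch; the `site` dictionary is a hypothetical four-level store, and in practice you would build it from a crawl of your own site. Note that this sketch counts the homepage as depth 0, while some SEO tools count it as 1.

```python
from collections import deque


def click_depths(links, start="/"):
    """Breadth-first search over an internal link graph.

    links maps each page to the pages it links to. The result maps every page
    reachable from start to the minimum number of clicks needed to reach it.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS = shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: home page -> category -> subcategory -> product.
site = {
    "/": ["/shoes/", "/about/"],
    "/shoes/": ["/shoes/running/"],
    "/shoes/running/": ["/shoes/running/trail-runner-2/"],
    "/about/": ["/"],
}
for page, depth in sorted(click_depths(site).items(), key=lambda item: item[1]):
    print(depth, page)
# 0 /
# 1 /shoes/
# 1 /about/
# 2 /shoes/running/
# 3 /shoes/running/trail-runner-2/
```

Pages that never show up in the result are not reachable through internal links at all, which is a strong hint that crawlers may miss them too.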

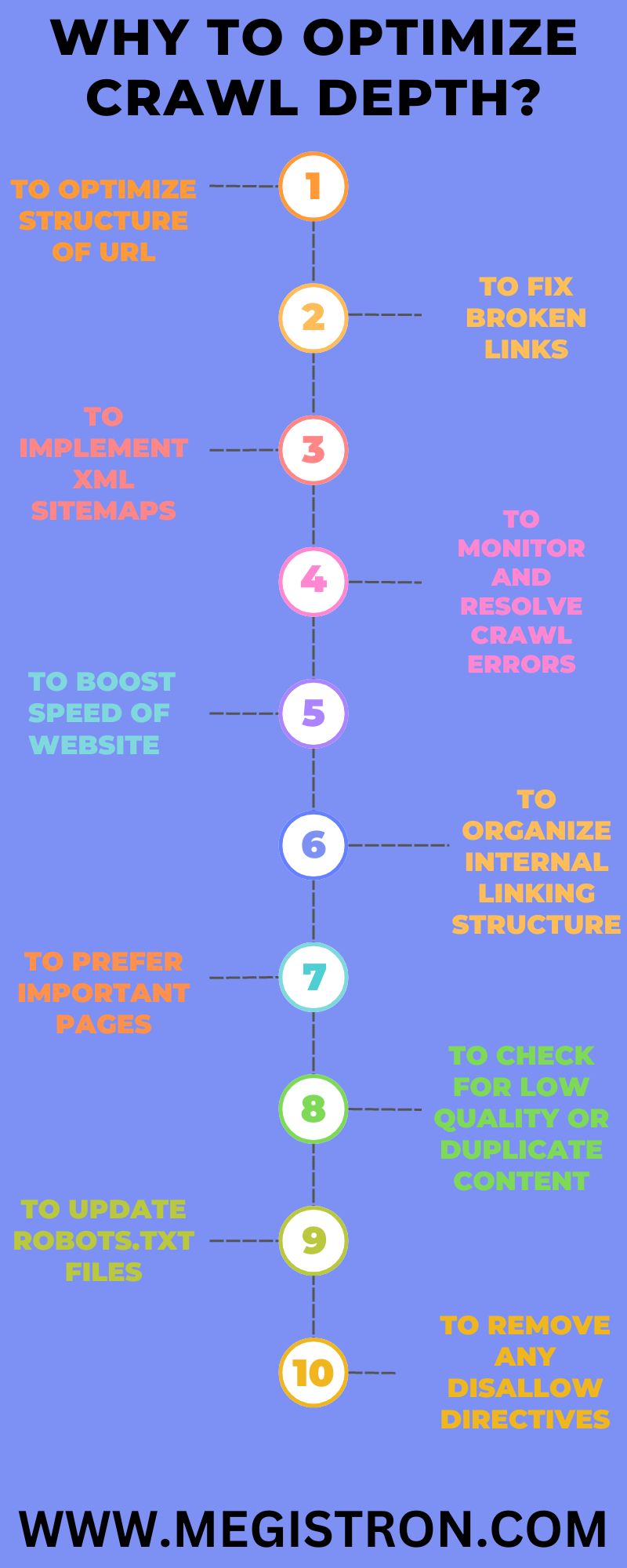

Why optimize crawl depth?

- To optimize URL structure

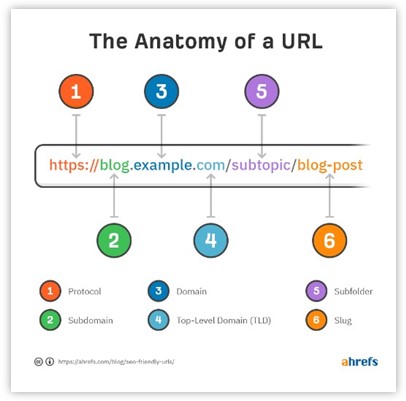

A well-optimized URL can contribute to a more efficient crawl process. You can optimize URLs by following these standards:

- Use a standard URL structure

- Include the company name in the website's domain name

- Use hyphens between words so that search engines understand them as separate words

- Take advantage of relevant keywords

- Remove unnecessary words and spaces

Clear and concise URLs reflect the content and hierarchy of a page. This helps search engine crawlers understand the structure of a website and index its content, which improves the user experience and can increase the website's ranking. The image from Ahrefs in this section highlights the anatomy of a URL.
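The URL guidelines above (lowercase words, hyphens between words, no unnecessary words or spaces) can be applied automatically when generating page slugs. Here is a minimal sketch; the `STOP_WORDS` set and the `slugify` name are hypothetical choices for illustration, and you should check that removing filler words never strips a keyword you want to keep.

```python
import re
import unicodedata

# Hypothetical list of filler words to drop; tune it for your own content.
STOP_WORDS = {"a", "an", "and", "the", "of", "for", "to", "in"}


def slugify(title):
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Fold accented characters to ASCII (e.g. "é" -> "e").
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Keep only runs of letters and digits; spaces and punctuation are dropped.
    words = re.findall(r"[a-z0-9]+", text.lower())
    kept = [word for word in words if word not in STOP_WORDS]
    return "-".join(kept)


print(slugify("The Ultimate Guide to Crawl Depth & SEO"))  # ultimate-guide-crawl-depth-seo
```

A page built from that title might then live at a URL such as `https://example.com/blog/ultimate-guide-crawl-depth-seo` (a hypothetical address).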

- To fix broken links

Broken links, also called dead links, point to pages that no longer exist. They hurt a website's SEO because they cause problems for search engine crawlers as they index the site's content. When crawlers encounter broken links, they may conclude that the website is not properly designed or maintained. Visitors who follow a broken link also land on a 404 error page. Because search engines prioritize user experience, websites with broken links can lose credibility and authority, leading to less traffic and lower rankings on the SERPs. Therefore, it is crucial to check for and fix broken links regularly and as a priority. You can also build new links with existing partners to increase the site's visibility.
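If you want to spot-check links yourself before reaching for an audit tool, a minimal sketch using only Python's standard library could look like this. It sends a `HEAD` request to each URL, follows redirects, and reports anything that returns a 4xx or 5xx status or cannot be reached. The function names are hypothetical, and some servers reject `HEAD` requests (for example with a 405), so a production checker would retry those with `GET`.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def http_status(url, timeout=10.0):
    """Return the final HTTP status code for url (redirects are followed),
    or None if the server could not be reached."""
    request = Request(url, method="HEAD")
    try:
        with urlopen(request, timeout=timeout) as response:
            return response.status
    except HTTPError as error:  # 4xx and 5xx responses raise HTTPError
        return error.code
    except OSError:  # DNS failures, refused connections, timeouts
        return None


def find_broken_links(urls, get_status=http_status):
    """Map each broken URL (status >= 400 or unreachable) to its status."""
    broken = {}
    for url in urls:
        status = get_status(url)
        if status is None or status >= 400:
            broken[url] = status
    return broken


# Offline demo with made-up statuses; pass real URLs (and the default
# get_status) to check a live site.
fake_statuses = {"https://example.com/": 200, "https://example.com/old-page": 404}
print(find_broken_links(fake_statuses, get_status=fake_statuses.get))
# {'https://example.com/old-page': 404}
```

The status lookup is a parameter so the reporting logic can be tested without network access.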

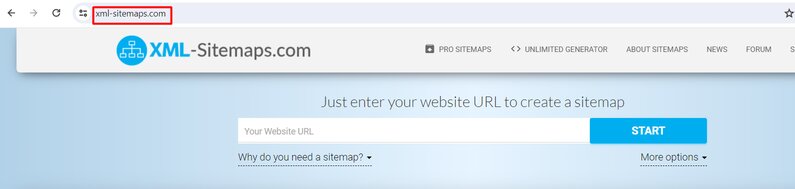

- To implement XML sitemaps

According to Google, a sitemap is a file where you provide information about the pages, videos, and other files on your site, and the relationships between them. An XML sitemap therefore helps search engines crawl your website more efficiently. Sitemaps are especially vital for large websites with content spread across many pages, because they help ensure that search engines notice all of those pages.
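Most CMSs and sitemap generator tools create this file for you, but it helps to see how simple the format is. Below is a minimal sketch that builds a sitemap in the sitemaps.org XML format from a list of `(url, lastmod)` pairs; the `build_sitemap` name and the example URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build an XML sitemap from (url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes "&" etc.
        if lastmod:
            # W3C date format, e.g. 2024-05-01
            ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/shoes/running/", None),
]))
```

Once the file is published (commonly at `/sitemap.xml`), you can submit it in Google Search Console so Google knows where to find it.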

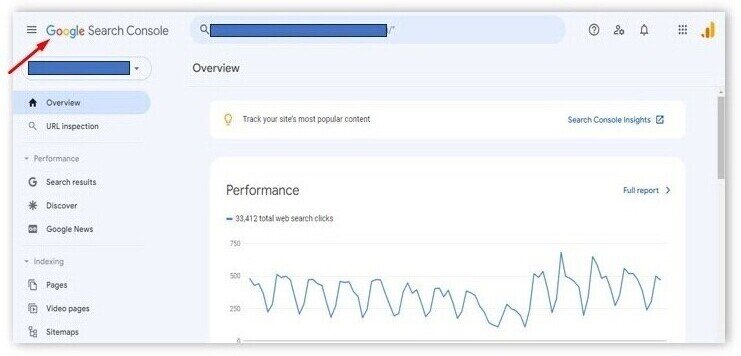

- To monitor and resolve crawl errors

A crawl error occurs when a search engine crawler fails to access, render, or index your web pages. Crawl errors can hurt your search performance and user experience, so it is crucial to identify and fix them promptly. Rendering is another step that search bots perform: the bot processes a page's code to see the page as a browser would, which helps it assess how the page loads, what type of content it contains, and which links it includes. This helps search engines evaluate a website's overall performance, which in turn influences how they rank your pages. You can use Google Search Console, Ahrefs, Moz, and SEMrush to find and fix crawl errors. Tools like these can crawl or report on your website and provide insights into crawl depth, duplicate content, structured data, and more. With them, you can audit your website structure, resolve server issues, and redirect pages to improve your website's SEO performance.
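Alongside those tools, your own server logs show exactly which URLs returned errors to a crawler. The sketch below assumes your server writes the common Apache/Nginx "combined" log format, and counts Googlebot requests that got a 4xx or 5xx response. The function name and sample log lines are made up for illustration. Also note that anyone can fake a Googlebot user agent, so Google recommends verifying real Googlebot traffic with a reverse DNS lookup.

```python
import re
from collections import Counter

# "Combined" log format: IP, identity, user, [time], "request", status, size,
# "referrer", "user agent".
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def crawler_errors(log_lines, bot="Googlebot"):
    """Count (path, status) pairs for requests from bot that returned 4xx/5xx."""
    errors = Counter()
    for line in log_lines:
        match = LOG_LINE.match(line)
        if not match or bot not in match["agent"]:
            continue  # unparseable line or a different user agent
        status = int(match["status"])
        if status >= 400:
            errors[(match["path"], status)] += 1
    return errors


sample = [
    '66.249.66.1 - - [01/May/2024:10:00:00 +0000] "GET /shoes/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [01/May/2024:10:00:05 +0000] "GET /old-page HTTP/1.1" 404 312 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [01/May/2024:10:00:09 +0000] "GET /checkout HTTP/1.1" 500 0 "-" '
    '"Mozilla/5.0 (Windows NT 10.0)"',
]
for (path, status), count in crawler_errors(sample).most_common():
    print(status, path, count)
# 404 /old-page 1
```

The 500 error on `/checkout` is ignored here because it came from a regular browser, not from the crawler.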

- To boost website speed

According to Google, the probability of a bounce increases by 32% as page load time goes from 1 second to 3 seconds. Page speed is also a key factor in your website's conversion rate. You can use PageSpeed Insights, a free tool from Google, to check your page speed. If your site responds too slowly, search engine crawlers may fetch fewer of its pages, which means some pages may not be crawled or indexed promptly. If your site is slow, you can check the Core Web Vitals report to find the cause. Google Lighthouse, another free tool, can audit your pages and give more detailed information about what to improve.
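If you want to track page speed for many URLs, PageSpeed Insights also has a public API. The sketch below is based on the v5 `runPagespeed` endpoint. The fields read in `summarize()` reflect that API's JSON response as we understand it, so verify them against Google's API reference before relying on them. The helper names are hypothetical, and Google recommends an API key for regular automated use.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url, strategy="mobile"):
    """Build a PageSpeed Insights API v5 request URL for page_url."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


def summarize(report):
    """Pull the performance score (0-100) and two lab metrics from a PSI response."""
    lighthouse = report["lighthouseResult"]
    audits = lighthouse["audits"]
    return {
        "performance": round(lighthouse["categories"]["performance"]["score"] * 100),
        "lcp": audits["largest-contentful-paint"]["displayValue"],
        "cls": audits["cumulative-layout-shift"]["displayValue"],
    }


def fetch_report(page_url, strategy="mobile", timeout=60.0):
    """Call the live API (network access required)."""
    with urlopen(psi_request_url(page_url, strategy), timeout=timeout) as response:
        return json.load(response)


# Usage (needs network access):
# print(summarize(fetch_report("https://example.com/")))
```

Keeping the URL building and response parsing separate from the network call makes it easy to log results over time and compare them after each change.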

- To organize internal linking structure

Internal links are links that point from one page to another page within the same website. They help users and search engine crawlers navigate your website, which helps crawlers discover, render, and index your content. Search engine algorithms generally treat a page or website that receives many high-quality links as a signal that it contains useful content. Internal linking is part of link building in SEO. Link building refers to acquiring high-quality links from authoritative websites to increase the visibility and credibility of your website on the SERP. By creating a logical site structure, you can add links to content that currently has too few internal links. If you have recently gone through a site migration, bulk deletion, or structure change, check that your content does not still point to obsolete internal links. Remember, content is always king in the digital marketing world. So make sure to add internal links to your most valued and most visited content to improve its position on the search engine results page (SERP), and distribute internal links throughout your website so that every page receives enough links.
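To find content that doesn't have enough internal links, you can count how many pages link to each URL. The sketch below assumes you already have a link map from a site crawl and a full list of pages, for example taken from your XML sitemap. All names and URLs are hypothetical. Pages with zero inbound links, often called orphan pages, cannot be reached by following links at all.

```python
from collections import Counter


def inbound_link_counts(links, all_pages):
    """Count internal links pointing at each page (self-links ignored)."""
    counts = Counter({page: 0 for page in all_pages})
    for source, targets in links.items():
        for target in set(targets):  # count each linking page only once
            if target != source:
                counts[target] += 1
    return counts


def weakly_linked(links, all_pages, minimum=1):
    """Pages with fewer than `minimum` inbound internal links, fewest first."""
    counts = inbound_link_counts(links, all_pages)
    return sorted((page for page in all_pages if counts[page] < minimum),
                  key=lambda page: (counts[page], page))


site = {
    "/": ["/blog/", "/about/"],
    "/blog/": ["/blog/crawl-depth/", "/"],
    "/blog/crawl-depth/": ["/blog/"],
}
pages = ["/", "/about/", "/blog/", "/blog/crawl-depth/", "/blog/old-guide/"]
print(weakly_linked(site, pages))             # ['/blog/old-guide/']
print(weakly_linked(site, pages, minimum=2))  # ['/blog/old-guide/', '/', '/about/', '/blog/crawl-depth/']
```

Raising `minimum` surfaces pages that are linked but only barely, which are good candidates for extra links from popular content.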

- To prioritize important pages

Important pages, such as the homepage, about page, contact page, and recently updated content, should have a shallow crawl depth. A page with a shallow crawl depth can be reached directly from the homepage, without navigating through several intermediate pages. The website's content should be organized so that it is easy to navigate. This improves the overall SEO performance of the website, because crawlers can quickly reach all of its important pages.

- To check for low quality or duplicate content

Duplicate content is content that appears on more than one page on the web, while low-quality content includes pages with many spelling and grammar mistakes. Duplicate content can cause Google's crawlers to spend time on redundant URLs instead of your important pages, and a confusing site structure may leave the crawler unable to tell which version to index. Duplicates often arise from session IDs in URLs, pagination issues, and redundant content elements.
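A simple way to find exact duplicates, such as the same page served under URLs that differ only by a session ID, is to fingerprint each page's main text and group URLs that share a fingerprint. The sketch below assumes you have already extracted each page's text. The `sessionid` parameter and URLs are hypothetical. It only catches copies that are identical after normalizing case and whitespace; near-duplicates need fuzzier techniques such as shingling or SimHash.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text):
    """Hash of the text after lowercasing and collapsing whitespace, so copies
    that differ only in case or spacing get the same fingerprint."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def duplicate_groups(pages):
    """Group URLs whose text is identical after normalization."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [urls for urls in groups.values() if len(urls) > 1]


pages = {
    "https://example.com/shoes/": "Running shoes for every trail.",
    "https://example.com/shoes/?sessionid=123": "Running  shoes for every trail.",
    "https://example.com/about/": "About our store.",
}
print(duplicate_groups(pages))
# [['https://example.com/shoes/', 'https://example.com/shoes/?sessionid=123']]
```

For each group you find, you can then pick one preferred URL and point the others to it, for example with a canonical tag or a redirect.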

- To update robots.txt files

A robots.txt file is created by the website administrator to tell search engine crawlers which pages on the website they may crawl. According to Google's robots.txt specification, the file must be UTF-8 encoded plain text, and its lines must be separated by CR, CR/LF, or LF. A robots.txt file is useful for keeping crawlers away from duplicate content that you don't want to appear in the SERPs. It can also keep entire sections of a website, such as internal search result pages, from being crawled, and specify a crawl delay so that crawlers don't overload your server by requesting too many pages at once (note that Googlebot ignores the crawl-delay rule).
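Python's standard library includes a robots.txt parser, which makes it easy to sanity-check a file before you upload it. The sketch below decodes the file as UTF-8, accepts CR, CR/LF, or LF line endings as described above, and then asks whether a crawler may fetch a given URL. The rules and URLs are hypothetical. Keep in mind that `urllib.robotparser` applies the first matching rule and does not understand `*` wildcards in paths, whereas Google uses the most specific matching rule, so treat its answers as an approximation of Googlebot's behavior.

```python
import re
from urllib.robotparser import RobotFileParser


def parse_robots_txt(raw):
    """Decode robots.txt bytes as UTF-8 and parse them.

    Lines may end in CR, CR/LF, or LF. Raises UnicodeDecodeError if the file
    is not valid UTF-8 ("utf-8-sig" also strips an optional byte order mark).
    """
    text = raw.decode("utf-8-sig")
    lines = re.split(r"\r\n|\r|\n", text)
    parser = RobotFileParser()
    parser.parse(lines)
    return parser


raw = (b"User-agent: *\r\n"
       b"Disallow: /search\r"
       b"Disallow: /cart/\n"
       b"Crawl-delay: 5\n")
robots = parse_robots_txt(raw)
print(robots.can_fetch("Googlebot", "https://example.com/search?q=shoes"))  # False
print(robots.can_fetch("Googlebot", "https://example.com/shoes/"))          # True
print(robots.crawl_delay("Googlebot"))  # 5 (Googlebot itself ignores crawl-delay)
```

Here, internal search result pages under `/search` are blocked, while regular product pages remain crawlable.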

- To remove unnecessary disallow directives

Disallow directives are rules in the robots.txt file that tell search engine bots not to crawl certain content or pages of your website. Because crawlers skip disallowed content, important or relevant pages can end up hidden from them, which hurts your pages' search engine rankings. Therefore, it is crucial to remove disallow directives that block important pages; otherwise, search engines may show "No information is available for this page" for those pages in search results.
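A quick way to catch an overly broad disallow rule is to test your list of important URLs against the robots.txt file. Here is a minimal sketch using Python's `urllib.robotparser`. The `Disallow: /blog/` line stands in for a hypothetical accidental rule, and the same caveat applies as with any use of this module: it uses first-match rules without wildcard support, so results may differ from Google's for complex files.

```python
from urllib.robotparser import RobotFileParser


def blocked_pages(robots_txt, important_urls, user_agent="Googlebot"):
    """Return the important URLs that robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in important_urls if not parser.can_fetch(user_agent, url)]


robots_txt = """\
User-agent: *
Disallow: /cart/
Disallow: /blog/
"""
important = [
    "https://example.com/",
    "https://example.com/blog/crawl-depth/",
    "https://example.com/contact/",
]
print(blocked_pages(robots_txt, important))  # ['https://example.com/blog/crawl-depth/']
```

Any URL this prints is a page you consider important but have told crawlers to skip, so the matching disallow line is a candidate for removal.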

- To check the website's loading speed

Website speed is another factor that can hamper your website's performance, and many different factors can affect loading time. To check your website's loading time, you can use Google PageSpeed Insights. If a website doesn't load within a reasonable time, users and search engine bots may get frustrated and leave. Google may then treat the website as poorly optimized for users and rank it lower in search results, which can reduce traffic to your website and leave business objectives unfulfilled.

Additional information

Since we have talked a lot about SEO in this blog, it is worth understanding why SEO services matter for a website's crawl depth. Search engine optimization helps increase a website's page rankings. SEO also helps build your business's authority in the market at a low cost, promotes your business, and organically improves your website's ranking, which can enhance your business's visibility in the market. By creating SEO and PPC campaigns, you can achieve your goals. Refer to the SEO tips to increase the ranking of your webpage. Additionally, optimizing your website for mobile SEO is important for increasing its visibility. An SEO-friendly website helps search engine bots crawl your pages more effectively and index all your content, so that your website can appear at the top of the search results and drive traffic that converts.

Conclusion

Crawl depth is often overlooked in website management. Search engine bots crawl and index sites at an enormous scale, but controlling and optimizing your website's structure can still increase the performance and ranking of your web pages. Keeping crawl depth to a minimum will help you improve the search ranking of your webpages.

You can optimize site performance by hiring agencies that offer SEO planning and strategy, SEO audits, SEO reports, content marketing, social media marketing, and PPC audits, which can reduce your website management budget and increase traffic to your website. By optimizing pagination, fixing keyword cannibalization, and following the tips above, you can make your website content more visible, so that search engine bots can easily crawl and find it.